-

Neural Networks: Activation Function

Let’s understand activation functions with an exciting example. Consider the following dataset. We need to build a classification model for this problem. The problem is “non-linear”, so we cannot predict a label using a linear model. One possible solution to this problem is Feature Crosses. But what if we…

-

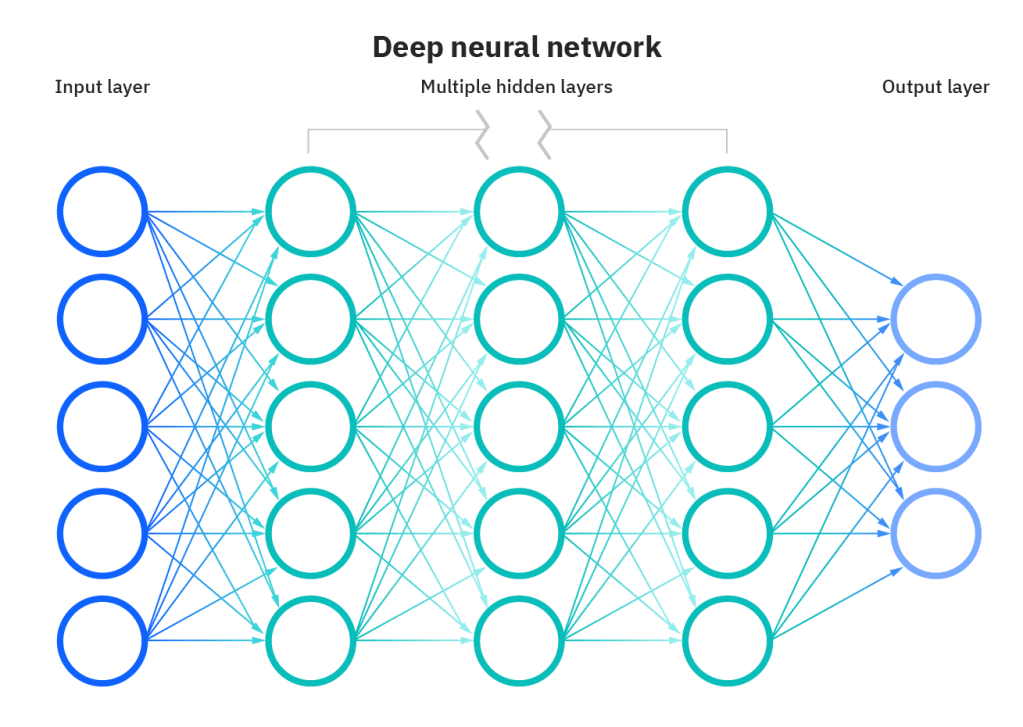

Neural Networks

Artificial Neural Networks (ANNs) are a subset of machine learning and form the base for deep learning. The name is inspired by the complex network of neurons that exists in the human brain. Neural networks are a set of algorithms that mimic the operations of a human brain to recognize relationships between vast amounts of…

-

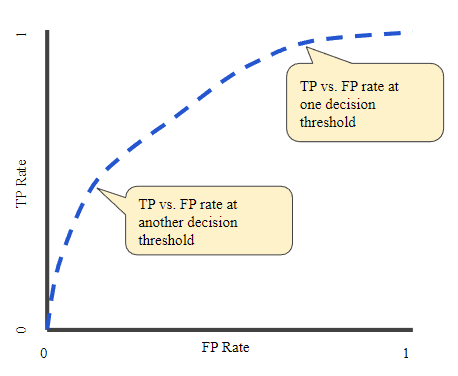

Classification: ROC Curve and AUC

ROC Curve: An ROC (receiver operating characteristic) curve is a graph showing the performance of a classification model at different threshold values. We plot the FPR (False Positive Rate) on the X-axis and the TPR (True Positive Rate) on the Y-axis. TPR is a synonym for recall and is defined as TP / (TP + FN). FPR is defined as…
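As a rough illustration of how the points on such a curve come about, the sketch below computes one (FPR, TPR) pair per threshold from the standard confusion-matrix counts. The function name `roc_points`, the toy labels and scores, and the chosen thresholds are all made up for this example and are not taken from the post.

```python
def roc_points(labels, scores, thresholds):
    """Return one (FPR, TPR) pair per threshold.

    labels: true classes (1 = positive, 0 = negative)
    scores: predicted probabilities for the positive class
    """
    points = []
    for t in thresholds:
        # A sample is predicted positive when its score reaches the threshold.
        tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= t)
        fn = sum(1 for y, s in zip(labels, scores) if y == 1 and s < t)
        fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= t)
        tn = sum(1 for y, s in zip(labels, scores) if y == 0 and s < t)
        tpr = tp / (tp + fn)  # true positive rate (recall)
        fpr = fp / (fp + tn)  # false positive rate
        points.append((fpr, tpr))
    return points


# Hypothetical toy data: two negatives and two positives.
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
points = roc_points(labels, scores, [0.0, 0.3, 0.5, 1.0])
print(points)
```

Lowering the threshold moves the point toward the top right of the plot (everything predicted positive), and raising it moves the point toward the bottom left. The sketch assumes the data contains at least one positive and one negative sample, otherwise the divisions would fail.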

-

Classification: Threshold value

Logistic Regression returns a probability, i.e., a value between zero and one. We can return this probability as is (e.g. the probability of a particular email being “spam” is 0.733) or we can convert it into a binary value like Email_spam (0 or 1). What do the probabilities indicate? If a Logistic Regression model returns a…
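A minimal sketch of that conversion, assuming a default threshold of 0.5 (the threshold values and the function name `to_label` are illustrative choices, not something the post specifies):

```python
def to_label(probability, threshold=0.5):
    """Map a predicted probability to a binary label (1 = spam, 0 = not spam)."""
    return 1 if probability >= threshold else 0


# The 0.733 probability from the example above, under two hypothetical thresholds.
email_spam = to_label(0.733)                       # default threshold of 0.5
email_spam_strict = to_label(0.733, threshold=0.9)  # stricter threshold
print(email_spam, email_spam_strict)
```

The same probability can yield different labels depending on the threshold, which is why the threshold is usually tuned for the problem rather than fixed at 0.5.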

-

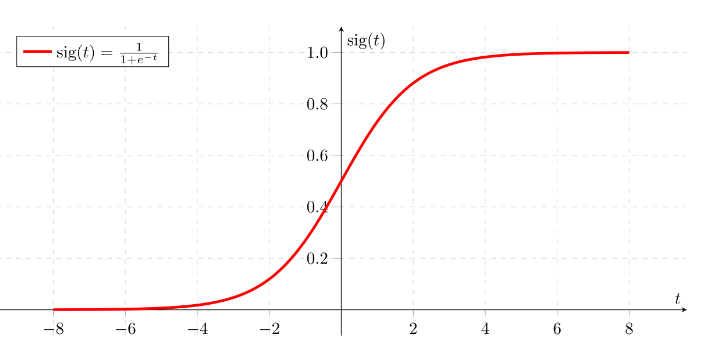

Logistic Regression

Logistic regression is used to calculate the probability of an outcome given an input variable, for example, the probability of an email being spam or not spam. The model returns the probability of the outcome in the range 0 to 1. We often use Logistic Regression combined with a threshold value in classification problems. Logistic…
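One way to see why the output always lands between 0 and 1 is the sigmoid function that logistic regression applies to a weighted sum of the inputs. The sketch below uses hand-picked, hypothetical weights and features; a real model would learn the weights from data.

```python
import math


def sigmoid(z):
    """Squash any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(features, weights, bias):
    """Probability of the positive class for one sample."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)


# Hypothetical weights and features chosen so the weighted sum is exactly 0.
p = predict_proba([1.0, 2.0], [0.5, -0.25], 0.0)
print(p)
```

A weighted sum of zero maps to a probability of one half; large positive sums approach 1 and large negative sums approach 0.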

-

Regularization: L1, L2, and Lambda

Regularization is a method for tackling overfitting in models. Lasso (L1) and Ridge (L2) are two popular regularization techniques.
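As a small sketch of what the two penalties look like, the snippet below computes the L1 term (sum of absolute weights) and the L2 term (sum of squared weights), each scaled by lambda. The weights and the lambda value are made up for illustration; in training, the penalty is added to the model's loss.

```python
def l1_penalty(weights, lam):
    """L1 penalty: lambda times the sum of absolute weight values."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights, lam):
    """L2 penalty: lambda times the sum of squared weight values."""
    return lam * sum(w * w for w in weights)


# Hypothetical weights and regularization strength.
weights = [0.5, -2.0, 0.0]
lam = 0.1
l1 = l1_penalty(weights, lam)
l2 = l2_penalty(weights, lam)
print(l1, l2)
```

Because the L2 term squares each weight, it punishes large weights much more heavily than small ones, while the L1 term grows linearly and tends to push some weights to exactly zero.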

-

Feature Engineering

What are Features? In machine learning, the characteristics of the data (in other words, columns that influence the model) that are selected while training the model are termed Features. Choosing good features is crucial for the performance of models. What is Feature Engineering? In programming, we focus mainly on the code. However, in machine learning…

-

Validation, Testing, and Training Sets

My notes on the testing, training and validation sets used in machine learning

-

Gradient Descent

Gradient Descent is an iterative optimization algorithm used to find a local minimum of a given function. It is used very frequently in Machine Learning, where it minimizes the cost/loss function, which helps in choosing an efficient model. For gradient descent, the function must be: differentiable: it must have a derivable…
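A minimal sketch of the iteration, assuming a simple one-dimensional function f(x) = (x - 3)^2 whose derivative is 2(x - 3). The function, starting point, learning rate, and step count are illustrative choices only.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from `start`."""
    x = start
    for _ in range(steps):
        x -= learning_rate * grad(x)  # move downhill by a fraction of the slope
    return x


# Derivative of the hypothetical function f(x) = (x - 3)^2.
def grad_f(x):
    return 2 * (x - 3)


minimum = gradient_descent(grad_f, start=0.0)
print(minimum)
```

Each step shrinks the distance to the minimum at x = 3 by a constant factor here; with a learning rate that is too large, the same loop would overshoot and diverge instead.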